Meta SAM 3D: Transforming Single Images Into 3D Reality

In November 2025, Meta unveiled SAM 3D, a breakthrough that brings common-sense 3D understanding to everyday images. As part of the Segment Anything suite, SAM 3D turns a single 2D photo into a fully textured, realistic 3D model, with no multi-camera imaging, depth sensors, or photogrammetry required.

Released with openly available model checkpoints and inference code, and reported by Meta to outperform previous single-view 3D methods, SAM 3D dramatically lowers the barrier to creating high-quality 3D assets for research, creative production, and commercial applications.

What Makes SAM 3D Different?

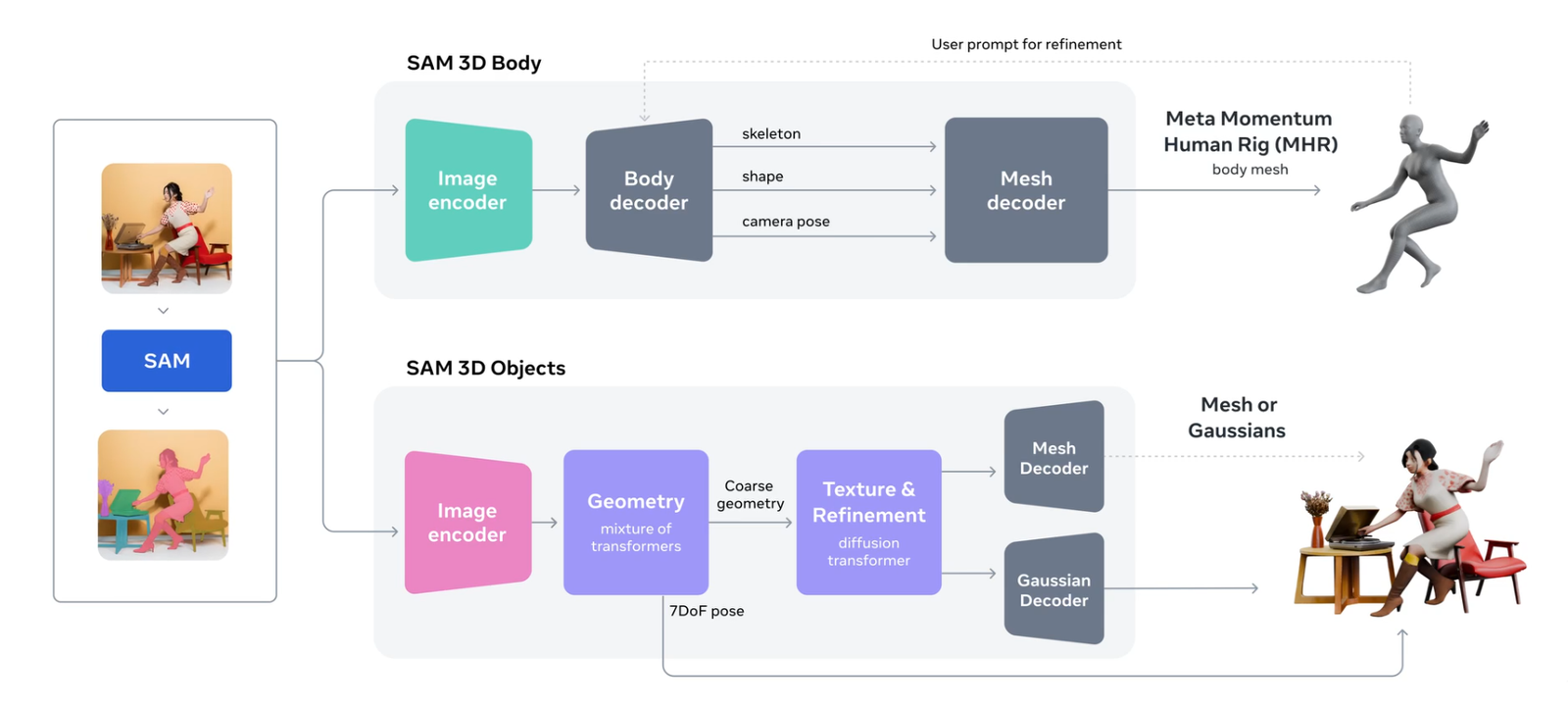

Two Specialised Models

SAM 3D Objects

Generates textured 3D meshes of individual objects or entire scenes.

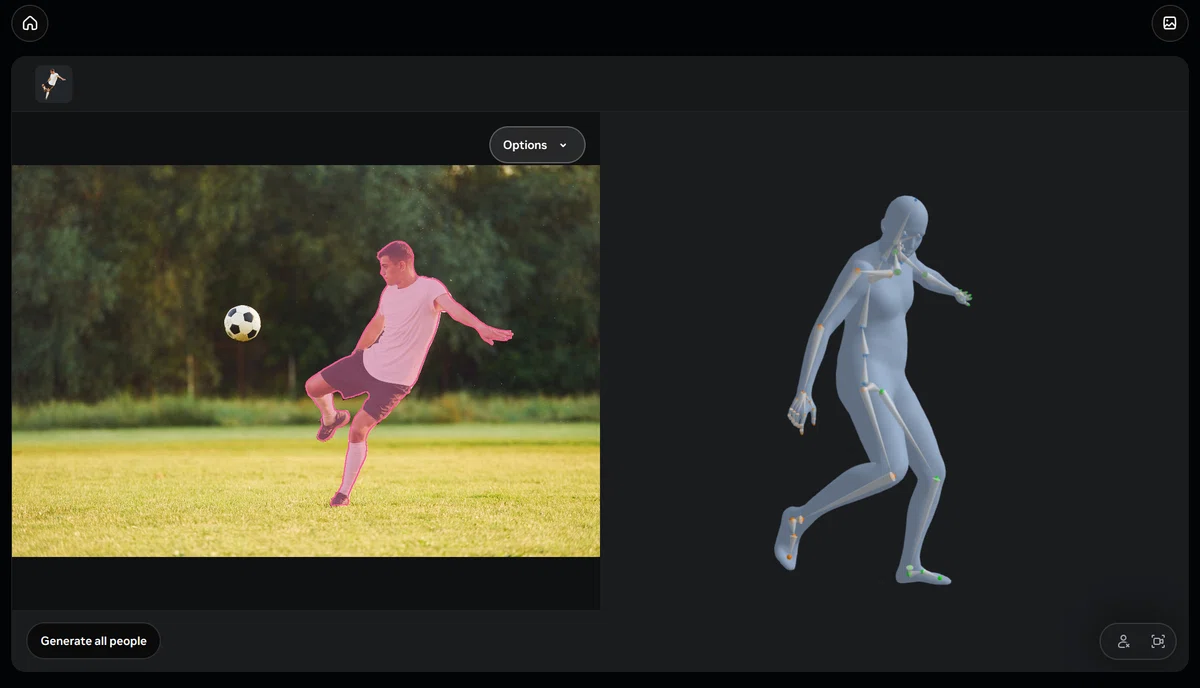

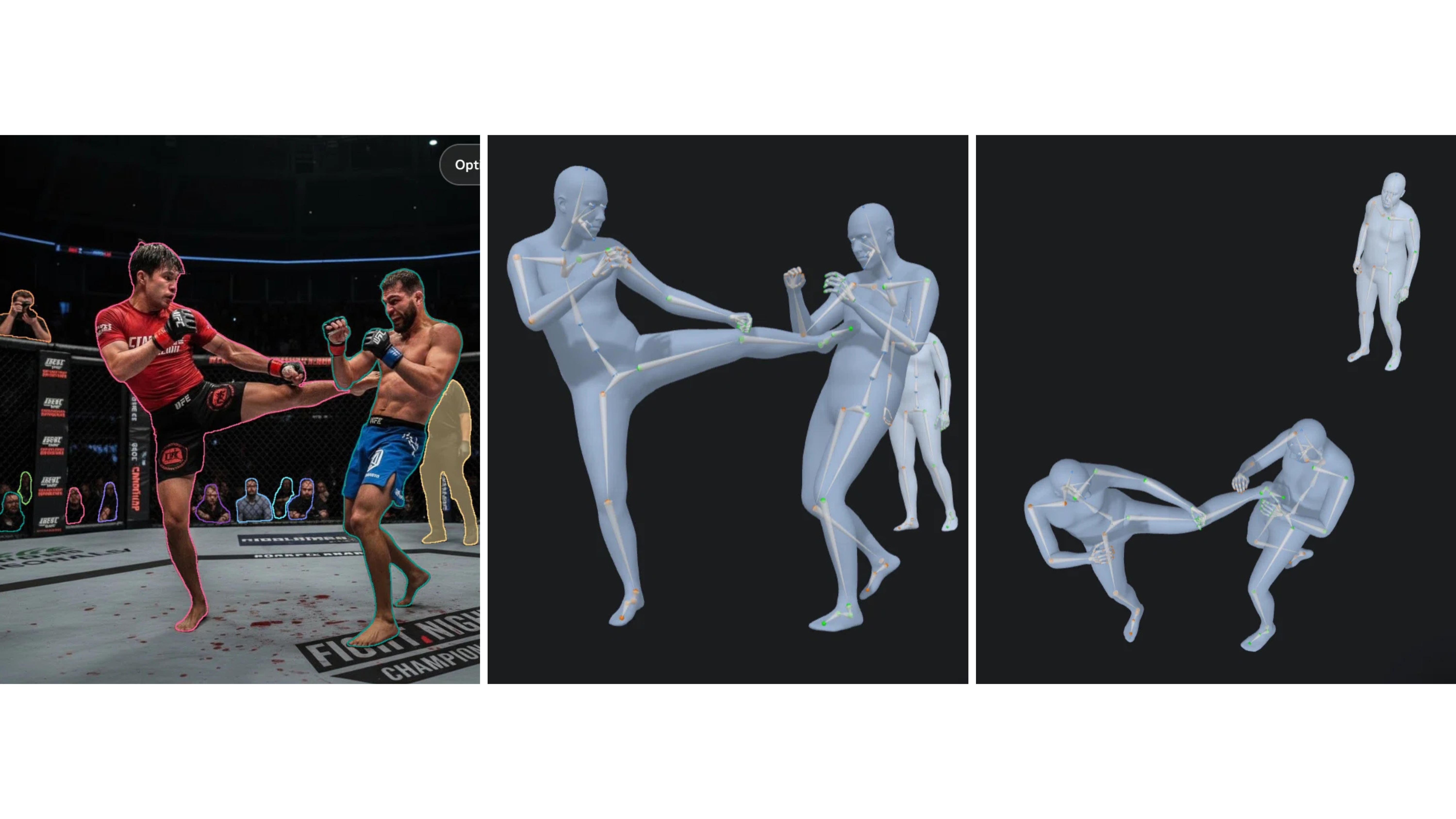

SAM 3D Body

Reconstructs full-body human pose and shape using the Meta Momentum Human Rig (MHR), Meta’s new parametric body model.

Key Innovations

- Single-image 3D reconstruction, a major leap beyond traditional scanning.

- Robust handling of occlusion thanks to learned “common-sense” priors.

- High-quality meshes & textures ready for AR/VR, games, and simulations.

- Human-refined outputs for more natural proportions and realism.

- Near-real-time generation, enabling one-click 3D creation for everyone.
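To make "meshes ready for AR/VR, games, and simulations" a little more concrete, here is a minimal sketch of what a triangle mesh looks like once it is written out in the widely supported Wavefront OBJ text format. It does not use SAM 3D itself: the `mesh_to_obj` helper and the single-triangle data are hypothetical stand-ins for a reconstructed mesh, and textures are left out for brevity.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]  # zero-based indices into the vertex list


def mesh_to_obj(vertices: List[Vertex], faces: List[Face]) -> str:
    """Serialise a triangle mesh as Wavefront OBJ text (geometry only)."""
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    # OBJ face indices are one-based, so shift each index by one.
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


# Hypothetical stand-in for a reconstructed mesh: a single triangle.
triangle_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
triangle_faces = [(0, 1, 2)]
obj_text = mesh_to_obj(triangle_vertices, triangle_faces)
```

Most game engines and 3D tools can import a file containing text like `obj_text`, which is why a plain mesh export is often the first step in an asset pipeline.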

How It Works

SAM 3D combines a Vision Transformer encoder, Segment Anything for object selection, depth and geometry predictors, and a fast Gaussian splatting renderer. Together, these components produce complete 3D assets in seconds from a single image, with no 3D expertise required.
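One way to build intuition for how a segmentation mask and a depth estimate combine into 3D geometry is the classic pinhole back-projection. The sketch below is not SAM 3D's implementation, which is a learned model; it only illustrates the underlying geometry. The `backproject` function, the tiny 2x2 depth map, and the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are all assumptions chosen for illustration.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def backproject(
    depth: Sequence[Sequence[float]],
    mask: Sequence[Sequence[bool]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Lift masked pixels with valid depth into camera-space 3D points."""
    points: List[Point3D] = []
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, keep) in enumerate(zip(depth_row, mask_row)):
            if keep and z > 0:
                # Pinhole model: pixel (u, v) at depth z maps to (x, y, z).
                x = (u - cx) * z / fx
                y = (v - cy) * z / fy
                points.append((x, y, z))
    return points


# Toy 2x2 "image": the mask selects the right-hand column as the object.
depth = [[2.0, 2.0], [2.0, 4.0]]
mask = [[False, True], [False, True]]
points = backproject(depth, mask, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

Here only the two masked pixels become 3D points. Back-projection alone recovers just the visible surface; completing the occluded back and sides of an object is where learned priors such as those described above come in.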

Real-World Applications

AR/VR & Digital Content

Instantly convert product photos or reference images into usable 3D assets for immersive experiences.

Robotics & Autonomous Systems

Provide depth and shape understanding from a single camera frame, improving navigation and object interaction.

Healthcare & Sports Analysis

Generate 3D human body approximations for posture analysis, rehabilitation, or athletic performance insights.
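As a small illustration of posture analysis, the sketch below computes the angle at a joint from three 3D keypoints, for example the knee angle from hip, knee, and ankle positions. The keypoint coordinates and the `joint_angle_degrees` helper are hypothetical; in practice the points would come from whatever body model or keypoint output your reconstruction pipeline provides.

```python
import math
from typing import Sequence


def joint_angle_degrees(
    a: Sequence[float], b: Sequence[float], c: Sequence[float]
) -> float:
    """Return the angle at point b, in degrees, between segments b->a and b->c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(p * q for p, q in zip(ba, bc))
    norm = math.sqrt(sum(p * p for p in ba)) * math.sqrt(sum(q * q for q in bc))
    if norm == 0.0:
        raise ValueError("joint angle is undefined for coincident points")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


# Hypothetical keypoints in metres: thigh vertical, shin horizontal.
hip = (0.0, 1.0, 0.0)
knee = (0.0, 0.5, 0.0)
ankle = (0.5, 0.5, 0.0)
knee_angle = joint_angle_degrees(hip, knee, ankle)
```

Tracking an angle like this across frames or sessions is one simple way a 3D body estimate can feed into rehabilitation or performance metrics.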

Gaming & Creative Workflows

Speed up asset creation by turning concept art or real-world photos into base 3D models for rapid prototyping.

E-Commerce Visualization

Enable 3D product previews or AR placement tools, enhancing online shopping engagement and conversion.

How SAM 3D Stands Out

Compared with traditional 3D scanning, SAM 3D is dramatically faster and more accessible.

Compared with earlier AI-based 3D models (e.g., Point-E, Shap-E, NeRF variants), SAM 3D delivers higher-fidelity, fully textured results and requires only a single image.

Its open release also makes it practical for developers and enterprises to adopt.

Why This Matters

SAM 3D represents a major step toward democratising 3D creation. As 2D and 3D content pipelines continue to merge, tools like SAM 3D will define the next generation of digital experiences: interactive, spatial, and highly immersive.

SAM 3D aligns strongly with our mission to help companies adopt next-generation AI and spatial computing technologies.

Whether integrating 3D asset automation, enhancing AR/VR experiences, improving visual workflows, or enabling product visualisation, we support organisations in turning breakthroughs like SAM 3D into real business value.

If your team is exploring 3D innovation, computer vision solutions, or AI-driven content generation, we’re ready to help you build what comes next.